While getting ready to upgrade our vCenter from 6.0 to 6.5 and SRM from 6.1.1 to 6.5, we ran into an issue where the SRM Embedded DB admin password had not been documented.

Follow the steps below to reset your forgotten password -

1) You will need to edit the pg_hba.conf file under -

C:\ProgramData\VMware\VMware vCenter Site Recovery Manager Embedded Database\data\pg_hba.conf

We had installed SRM on the E:\ drive, but the data folder was nowhere to be found there.

So I searched for the pg_hba.conf file and found it under -

C:\ProgramData\VMware\VMware vCenter Site Recovery Manager Embedded Database\data

2) Make a backup of pg_hba.conf

3) Stop service -

Display Name - VMware vCenter Site Recovery Manager Embedded Database

Service Name - vmware-dr-vpostgres

4) Edit pg_hba.conf in WordPad.

5) Locate the following in the file -

# TYPE DATABASE USER ADDRESS METHOD

# IPv4 local connections:

host all all 127.0.0.1/32 md5

# IPv6 local connections:

host all all ::1/128 md5

6) Replace md5 with trust so the changes look like the following -

# TYPE DATABASE USER ADDRESS METHOD

# IPv4 local connections:

host all all 127.0.0.1/32 trust

# IPv6 local connections:

host all all ::1/128 trust
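If you would rather script the edit in steps 5 and 6 than make it by hand, the following is a minimal Python sketch of the same change. The function name `set_loopback_method` is my own and not part of any VMware or PostgreSQL tooling, and it assumes the loopback records use the five-field CIDR form shown above.

```python
# Loopback addresses used by the two records shown in step 5.
LOOPBACK = {"127.0.0.1/32", "::1/128"}


def set_loopback_method(text, old, new):
    """Return pg_hba.conf text with the auth method `old` swapped for `new`
    on uncommented loopback "host" records. All other lines are untouched."""
    out = []
    for line in text.splitlines():
        fields = line.split()
        if (len(fields) == 5 and fields[0] == "host"
                and fields[3] in LOOPBACK and fields[4] == old):
            # The method is the last field, so swap only the line's tail
            # and keep the original column spacing.
            stripped = line.rstrip()
            line = stripped[:-len(old)] + new
        out.append(line)
    return "\n".join(out) + "\n"
```

Read the file contents, pass them through `set_loopback_method(text, "md5", "trust")`, and write the result back while the database service is stopped. Calling it again with the arguments swapped undoes the change.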

7) Save the file and start the VMware vCenter Site Recovery Manager Embedded Database service.

8) Open command prompt as Administrator and navigate to -

E:\ProgramData\VMware\VMware vCenter Site Recovery Manager Embedded Database\bin

Note: You might have installed on a different drive.

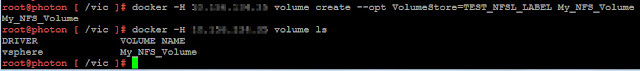

9) Connect to the postgres database using -

psql -U postgres -p 5678

This will bring you to a prompt - postgres=#

Note: 5678 is the default port. If you chose a different port during installation, replace it accordingly. If unsure, open ODBC and check under System DSN.

10) Run the following to change the password -

ALTER USER "enter srm db user here" PASSWORD 'new_password';

Note: The SRM DB user can be found under ODBC - System DSN. The new password must be enclosed in single quotes.
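The quoting in step 10 is easy to get wrong when the user name or password contains a quote character. As an illustration only, here is a small Python sketch that builds the statement with standard SQL quoting: double quotes around the role name, single quotes around the password, and embedded quotes doubled. The helper name `alter_user_sql` and the sample values are hypothetical.

```python
def alter_user_sql(user, new_password):
    """Build an ALTER USER statement with the role name double-quoted and
    the password single-quoted, doubling any embedded quote characters."""
    ident = '"' + user.replace('"', '""') + '"'
    literal = "'" + new_password.replace("'", "''") + "'"
    return f"ALTER USER {ident} PASSWORD {literal};"


# Hypothetical values; use the user name from your ODBC System DSN.
print(alter_user_sql("srm_db_user", "N3w-Passw0rd"))
```

Paste the printed statement at the postgres=# prompt. This sketch assumes PostgreSQL's default string handling, where a backslash in the password is treated as a literal character.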

11) If the command is successful, you will see the following output - ALTER ROLE

12) Your password has now been reset. Now you can take a backup of the Database.

Note: You cannot take a backup without resetting the password because it will prompt you for a password 😊

13) To take a backup run the following -

pg_dump.exe -Fc --host 127.0.0.1 --port 5678 --username=dbaadmin srm_db > e:\destination_location
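If you want to run the backup from a script, the pg_dump call in step 13 can be assembled as an argument list. This is a rough Python sketch, not a tested procedure: the helper name `build_pg_dump_cmd` and the output path are hypothetical, and it uses pg_dump's `--file` option in place of the `>` redirection.

```python
def build_pg_dump_cmd(db_name, user, out_file, host="127.0.0.1", port=5678):
    """Return the pg_dump argument list for a custom-format (-Fc) dump."""
    return [
        "pg_dump.exe",
        "--format=custom",        # long form of -Fc
        f"--host={host}",
        f"--port={port}",         # 5678 is the default mentioned in step 9
        f"--username={user}",
        f"--file={out_file}",     # write the dump here instead of using ">"
        db_name,
    ]


# Hypothetical output path; database and user names are taken from step 13.
cmd = build_pg_dump_cmd("srm_db", "dbaadmin", r"E:\backup\srm_db.dump")
print(" ".join(cmd))
```

From the bin folder in step 8, the list can be passed to `subprocess.run(cmd, check=True)` so that a failed dump raises an error instead of passing silently.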

14) Revert the changes made to the pg_hba.conf file (replace trust with md5, or just restore the backup you made in Step 2).
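Leaving trust in place would let anyone on the server connect without a password, so it is worth double-checking the revert. Below is a minimal Python sketch that lists any active records still using trust. The function name `find_trust_lines` is my own, and it assumes addresses are written in CIDR form (as in step 5), so the method is the fifth field on host lines and the fourth on local lines.

```python
RECORD_TYPES = {"local", "host", "hostssl", "hostnossl"}


def find_trust_lines(text):
    """Return 1-based line numbers of uncommented pg_hba.conf records
    whose authentication method is trust."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        # Drop any comment, then split the record into fields.
        record = line.split("#", 1)[0].split()
        if not record or record[0] not in RECORD_TYPES:
            continue
        # "local" records have no address field, so the method sits one
        # position earlier than on the host-style records.
        method_index = 3 if record[0] == "local" else 4
        if len(record) > method_index and record[method_index] == "trust":
            hits.append(number)
    return hits
```

An empty list means no trust records remain. If your original file already contained trust entries before step 6, compare the result against the backup from step 2 instead.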

15) Restart the VMware vCenter Site Recovery Manager Embedded Database service.

16) Go to Add/Remove Programs, select VMware vCenter Site Recovery Manager and click Change.

17) Be patient, it takes a while to load. On the next screen select Modify and click Next.

18) Enter PSC address and the username/password.

19) Accept the Certificate

20) Enter information to register the SRM extension

21) Next, choose whether to create a new certificate or use the existing one. Mine was still valid, so I used the existing one.

22) And finally you enter the new password you reset in Step 10.

23) Once the setup is complete, make sure the VMware vCenter Site Recovery Manager Server service has started. If not, start it manually.