- VMFS virtual disks (VMDKs), mounted as formatted disks directly on container VMs. These volumes are supported on multiple vSphere datastore types, including NFS, iSCSI and VMware vSAN. They are thin, lazy zeroed disks.

- NFS shared volumes. These volumes are distinct from a block-level VMDK on an NFS datastore. They are Linux guest-level mounts of an NFS file-system share.

VMDKs are locked while a container VM is running and other containers cannot share them.

NFS volumes, on the other hand, are useful when two or more containers need read-write access to the same volume.

To use container volumes, you must first create a volume store. To create one at the time of VCH creation, use the vic-machine create --volume-store option.

You can add a volume store to an existing VCH by using the vic-machine configure --volume-store option. If you are adding volume stores to a VCH that already has one or more volume stores, you must specify each existing volume store in a separate instance of --volume-store.

Note: If you do not specify a volume store, no volume store is created by default and container developers cannot create or run containers that use volumes.

In my example, I have already assigned a whole vSphere datastore as a volume store and want to add a new NFS volume store to the VCH. The syntax is as follows:

$ vic-machine-operating_system configure

--target vcenter_server_username:password@vcenter_server_address

--thumbprint certificate_thumbprint --id vch_id

--volume-store datastore_name/datastore_path:default

--volume-store nfs://datastore_name/path_to_share_point:nfs_volume_store_label

The nfs://datastore_name/path_to_share_point:nfs_volume_store_label value adds an NFS datastore in vSphere as the volume store. This means that a volume created on it is a VMDK file, which cannot be shared between containers.
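Because every existing volume store has to be repeated alongside the new one, it can help to assemble the command from a list of stores. The following is only a sketch: it prints the resulting command instead of running it, it assumes the Linux build of the tool (vic-machine-linux), and every value is a placeholder copied from the syntax above.

```shell
# Placeholders from the syntax above; replace them with real values.
target='vcenter_server_username:password@vcenter_server_address'
thumbprint='certificate_thumbprint'
vch_id='vch_id'

# Start with the fixed arguments, then append one --volume-store per store.
# The first store is the existing one, the second is the one being added.
set -- configure --target "$target" --thumbprint "$thumbprint" --id "$vch_id"
for store in \
    'datastore_name/datastore_path:default' \
    'nfs://datastore_name/path_to_share_point:nfs_volume_store_label'
do
    set -- "$@" --volume-store "$store"
done

# Dry run: print the assembled command.
# To execute it for real, run: vic-machine-linux "$@"
configure_cmd="vic-machine-linux $*"
echo "$configure_cmd"
```

Keeping the stores in one list makes it harder to forget an existing store when a new one is added later.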

To share a volume between containers, add an NFS mount point as the volume store instead. You need to specify the URL, UID, GID and access protocol.

Note: You cannot specify the root folder of an NFS server as a volume store.

The syntax is as follows:

--volume-store nfs://datastore_address/path_to_share_point?uid=1234&gid=5678&proto=tcp:nfs_volume_store_label
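One practical detail when typing this option in a shell: the & characters in the query string need to be quoted, otherwise the shell treats them as background operators and cuts the option short. Here is a minimal sketch that builds the value in a variable and prints the quoted option. The host nfs.example.com and the path exports/test2 are hypothetical stand-ins, and the UID and GID are the example values from the syntax above.

```shell
nfs_host='nfs.example.com'   # hypothetical NFS server address
share_path='exports/test2'   # hypothetical share point (not the server root)
uid=1234
gid=5678
label='nfs_volume_store_label'

# Double quotes keep the & characters literal while still expanding the variables.
volume_store="nfs://${nfs_host}/${share_path}?uid=${uid}&gid=${gid}&proto=tcp:${label}"

# Print the option wrapped in single quotes so it can be pasted into a command line.
printf '%s\n' "--volume-store '${volume_store}'"
```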

Two things to note in my example:

1) I am running a VM-based, RHEL-backed NFS server, and the UID and GID options did not work for me.

2) The workaround was to manually change the permissions on the test2 folder on the NFS share point by using chmod 777 test2.

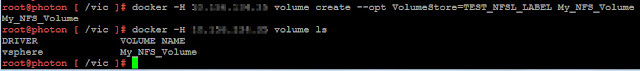

Now that the NFS volume store is added, let's create a volume on it.
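A sketch of what the volume creation could look like, assuming the VCH accepts the VolumeStore option of docker volume create and is reached over TLS. The endpoint vch_address:2376 is a hypothetical placeholder. By default the script only prints the command, so it is safe to try without a VCH.

```shell
# Dry run by default: DOCKER expands to "echo docker", so the command is only printed.
# Set DOCKER=docker in the environment to run it against a real VCH.
DOCKER="${DOCKER:-echo docker}"

vch_endpoint='vch_address:2376'   # hypothetical VCH Docker endpoint

# Create a volume on the volume store that carries the given label.
create_volume() {
    store_label=$1
    volume_name=$2
    $DOCKER -H "$vch_endpoint" --tls volume create \
        --opt VolumeStore="$store_label" "$volume_name"
}

create_volume nfs_volume_store_label My_NFS_Volume
```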

Next, deploy two containers with My_NFS_Volume mounted on both.
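The deployment could be sketched as follows. The container names nfs_test_1 and nfs_test_2, the mount path /mnt/shared, the busybox image and the endpoint vch_address:2376 are all assumptions chosen for illustration. As written, the script only prints the commands.

```shell
# Dry run by default; set DOCKER=docker to run against a real VCH.
DOCKER="${DOCKER:-echo docker}"

vch_endpoint='vch_address:2376'   # hypothetical VCH Docker endpoint
volume_name='My_NFS_Volume'
mount_path='/mnt/shared'          # hypothetical mount point inside the containers

# Start a detached container with the shared volume mounted read-write.
start_container() {
    $DOCKER -H "$vch_endpoint" --tls run -itd --name "$1" \
        -v "${volume_name}:${mount_path}" busybox
}

for name in nfs_test_1 nfs_test_2; do
    start_container "$name"
done
```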

To check whether the volume was mounted, run docker inspect containername. The details appear under Mounts, including the volume name and the read/write mode.
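To avoid scrolling through the full inspect output, the Mounts section can be selected with a format template. This is a sketch with a hypothetical container name (nfs_test_1) and endpoint (vch_address:2376). It prints the command by default rather than running it.

```shell
# Dry run by default; set DOCKER=docker to run against a real VCH.
DOCKER="${DOCKER:-echo docker}"

vch_endpoint='vch_address:2376'   # hypothetical VCH Docker endpoint

# Print only the Mounts section of a container's inspect output as JSON.
show_mounts() {
    $DOCKER -H "$vch_endpoint" --tls inspect --format '{{json .Mounts}}' "$1"
}

show_mounts nfs_test_1
```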

Now let's create a .txt file from one container and check whether it can be seen from the other.
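One way to run this check is with docker exec, assuming your VIC version supports it. The container names, the mount path /mnt/shared, the file name hello.txt and the endpoint are hypothetical. The script prints the two commands by default.

```shell
# Dry run by default; set DOCKER=docker to run against a real VCH.
DOCKER="${DOCKER:-echo docker}"

vch_endpoint='vch_address:2376'   # hypothetical VCH Docker endpoint
mount_path='/mnt/shared'          # hypothetical mount point of the shared volume

# Run a command inside a running container.
in_container() {
    container=$1
    shift
    $DOCKER -H "$vch_endpoint" --tls exec "$container" "$@"
}

# Write the file from the first container, then list the directory from the second.
in_container nfs_test_1 touch "${mount_path}/hello.txt"
in_container nfs_test_2 ls -l "$mount_path"
```

If the volume is shared, the listing from the second container includes hello.txt.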

This confirms that NFS-backed volumes can be shared between containers.
